Double-thinking in SQL

One of the first things a novice SQL developer learns about is called thinking in SQL

, which is usually being opposed to procedural thinking

Let's see what part of brain does this intellectual activity take and how to use it.

Two features distinguish SQL from other languages you learned as a 11-year old kid on your first PC, like BASIC or perl or maybe even C++ if you're such a Wunderkind.

First, SQL is set-based. It does things with sets.

Every tool is designed to do things with something else. Like, you use a hammer to do things with nails, or use a screwdriver to do things with screws, or use an oven to do things with food.

Same with computer languages.

BASIC does things with variables. perl does things with scalars, arrays, hashes and file streams. Assembly does things with registers and memory.

You should not be confused by something like registers are just a special case of variables

, or a hash is just a generalized container which exposes this and this method

or something like that. No.

A hash is a hash, a variable is a variable and a register is a register.

Like, an egg is a food and rice is a food and it's possible to cook some eggs in a rice cooker and vice versa, but they are just wrong tools to do that.

Akihabara district in Tokyo City. You can buy any type of gadget you can imagine, which is about 2% of what you will find there.

Prehistoric men had to make do with hammerstones and lithic blades (even to court their women), but now we have a whole district in Tokyo City for gadgets with USB type A, and another district for gadgets with USB type B.

So if you feel the urge to hash something and then make a good old array out of this, you don't use assembly, but perl or PHP instead.

Same with SQL. SQL does things with sets.

It's a tool that allows you to take a dozen or two of sets, mix them together, knead and wedge them then chop them apart and mix again, but the output you get is still a set and all inputs are sets.

Eeverything you do in SQL, you do it on sets. That's why SQL is called a set-oriented language.

Ok, that was the first feature that distinguishes SQL from other languages. What's the second one?

SQL is a declarative language. This means that you express what

you want to do with sets, not how

you want to do it.

This requires a little explanation.

In fact, every language is declarative, even assembly.

When you issue a command like xor eax, eax, you just command your CPU: go warm up your circuits and make it so that I see a zero in EAX right this nanosecond!

.

This command needs to be translated into an opcode, and the opcode should go to the instruction pipeline and dispatched into some circuits and make some transistor to change their state, but it is none of your concern. The language and the CPU abstract all this from you.

In the same way, perl abstracts hashes and arrays, ASP abstracts HTTP requests and responses and Windows API abstracts windows and controls.

SQL, of course, abstracts sets.

Abstractions simplify your life. But an abstraction in computer world usually means one of two things:

- You save some time on brainless operations, like typing or remembering, or

- You save some time on decision making

Brainless part is easy: you just do things human way and make computer to do same things computer way. This is usually a one-to-one mapping.

For instance: xor eax, eax is much easier to remember than 31 C0. That's why we use assembly.

int __stdcall is much easier to remember than pass variables by value in stack, rightmost first, return the result in EAX and clean the stack inside the calling function

. That's why we have calling conventions.

Darling Ctrl-K is much easier to type than something like my spouses really long email address made up by a sysadmin whose head is full of unwarranted self importance at example dot com

. That's why we use address book in Outlook.

These examples don't imply any decision making. There is hardly a way to mess something up here, that's why computers are very good in these things.

Decision part is more complex, and it usually deals with more complex abstractions.

In an x86 CPU there are at least two ways to zero a EAX:

xor eax, eax, andmov eax, 0

The first instruction is more efficient and takes only 2 bytes in code, while the second one is (or at least was) less efficient and takes something about 5 bytes.

The problem is that the first instruction works only on zeros.

Like, for the following C code:

if (myfunc())

return 1;

else

return 0;

, an efficient C compiler should generate different opcodes for different execution paths, despite the return construct being the same. This is where compiler makes a decision: if I need to return a zero in EAX, I'll better use

.xor eax, eax, otherwise I just have no choise but to use mov eax

Note that the code will still work if the compiler makes incorrect decision, it just will be 3 bytes longer and maybe several nanoseconds less efficient.

That's what distinguishes time saving

features from decision making

features.

In the first case, you know in advance how the computer will make something, and if it's doing it wrong way, it's doing it wrong.

In the second case, you know what will it make, but don't know (or don't care) how as long as it's done.

SQL heavily, vastly relies on decisions made by the computer. Probably more than any other widely used language.

There is only one way to translate a xor eax, eax into an opcode. Two ways to compile return 0 in an stdcall function. Probably a dozen ways to decide which dead object should be recycled by a garbage collector.

But for a simplest SELECT query which is only 30 rows long, there are hundreds of decisions to make, and I'm not making this up:

- There are

FULL SCAN,INDEX FAST FULL SCAN,INDEX SKIP SCAN,INDEX RANGE SCANto retrieve the values from the tables - There are

NESTED LOOPS JOIN,MERGE JOINandHASH JOINto join them - There are

NESTED LOOPS SEMI JOIN,MERGE SEMI JOINandHASH SEMI JOINto checkINandEXISTSpredicates - There are

NESTED LOOPS ANTI JOIN,MERGE ANTI JOINandHASH ANTI JOINto checkNOT INandNOT EXISTSpredicates - There are

SORT DISTINCTandHASH AGGREGATEto group the records - There are

LAZY SPOOLandEAGER SPOOLto build temporary indexes for the duration of the query - There are numerous ways to pipeline and parallelize the resultsets to benefit from multiprocessing

- There are bitmap indexes to indicate existence of a record in a memory-efficient way

- There is

CONNECT BY PUMPto keep the recursion stack for recursive queries

etc, etc.

But why so complex?

With a hammer, you drive nails. With SQL, you drive sets.

Image by: Curtis and Judy

As I already said, SQL works on sets.

Sets are very complex things which can take lots of memory and disk resources, and operations on them may be very costly.

And worse than that: there are many operations for which there are no single algorithm of choice

.

For instance, there are 3 widely used JOIN algorithms: NESTED LOOPS JOIN, MERGE JOIN and HASH JOIN.

The first one is best for joining a resultset on a selective indexed field, the second one is best for joining sorted resultsets, and the third one works equally poor for everything, but still exposes the best time when the resultsets are neither filtered nor sorted, but this only applies for joins on equality condition, otherwise it's impossible to use it, et cetera, et cetera.

The whole point of SQL is that it abstracts all this complexity from you. You just write your SELECT * FROM table1 NATURAL JOIN table2 and let the optimizer choose all these, Lord help me, hashes or merges or indexes or whatever.

The first thing any SQL developer should learn is formulating his needs in the terms of sets.

I won't give a lecture of relational algebra here, but there are some basic operations you can do with sets, like joining them, grouping, sorting etc., and by combining these operations, you can do almost everything with sets.

Like with plain algebra: there is addition, and subtraction (which is just a generalized addition), and multiplication (which is a generalized addition too), and so on, and finally we get a proof Fermat's Last Theorem (which is probably is a very, very generalized addition too, but I'm not smart enough to grok it).

So, thinking in SQL

means an ability to formulate your needs in terms of set operations and to tell your database system how exactly you'd like to mutilate your data before it's output to you.

Here's the magic of SQL, kids. You just tell it what you want, and it decides the best way to do it, that's how smart computers are. Thank you for your time, goodbye.

Why, you're still here?

Oh, I finished on thinking

, and my article was called double-thinking

? OK.

Remember I said that SQL decides the best way?

Well, no, it doesn't. And every SQL developer sees it very, very soon.

Columnist Joel Spolsky coined the term Leaky Abstraction

for the situations when the abstracting tool gives you a result in a non-optimal way, or gives no result at all. And SQL is number 2 in his list of examples, right after iterating a two-dimensional array which can cause lots of page faults if done improperly.

He writes:

The SQL language is meant to abstract away the procedural steps that are needed to query a database, instead allowing you to define merely what you want and let the database figure out the procedural steps to query it.

But in some cases, certain SQL queries are thousands of times slower than other logically equivalent queries.

A famous example of this is that some SQL servers are dramatically faster if you specify

where a=b and b=c and a=cthan if you only specifywhere a=b and b=ceven though the result set is the same.You're not supposed to have to care about the procedure, only the specification. But sometimes the abstraction leaks and causes horrible performance and you have to break out the query plan analyzer and study what it did wrong, and figure out how to make your query run faster.

The most interesting thing he mentioned is the query plan analyzer.

Let's return to other languages. Like PHP. PHP abstracts lots of things, but among the most used ones are arrays , HTTP requests and web servers.

In PHP, you just write something like $a[0] = $_SERVER['HTTP_USER_AGENT'] and the string that browser identified itself with in the HTTP request goes to the array.

Ask almost any decent PHP developer on what's going on inside the computer when this command executes, and he or she will most probably shrug and give an answer like It must allocate some memory, probably, then get the string out of Apache, and then, um, put the string into the memory and increase the counter somewhere

.

In other words, they don't know and don't care. But they still are brilliant PHP developers, they can do wonders with arrays and requests.

gcc has an option to output the assembly when you compile a program so you can check how effciently it did its work. But ask any C++ programmer what switch do you use to do that, and 9 of 10 would not be able to remember it. It's just not used frequently.

Knowing the PHP or gcc internals is not required to use these tools efficiently. PHP just works and you can program good PHP all your life without ever knowing how exactly does the PHP interpreter push a function reference to an array of hashes.

And of course no PHP distribution comes with a debugger that shows you what is it going do on each step: look, I'm going to allocate this memory and fill it with zeroes and scan it and put a string here.

However, every decent RDBMS has some mean to preview an execution plan for your query. How exactly will the query work? What algorithm does the query optimizer use? Will it scan table A or table B first?

I know no other tool or language that exposes so much of its internals.

To know what PHP or perl does when allocating an array, you'll have to run it under debugger. To know what .NET does when creating as instance of List is probably a licence violation, a felony and a deadly sin, all at once. Many products just refuse to run under debugger so that their precious internal logic of twice two making a wax candle

would not leak outside.

But when it comes to SQL, Microsoft gives a graphical tool which allows you to see what exactly happens when you run an SQL query. And unlike gcc -s, this tool is used heavily.

They actually made an effort to show you what's going on inside their product. To show, not to hide.

They are exposing exact algorithms used by the server, they are explaining how much time will an operation take, and they are not hiding it in a dusty PDF file: they make it so that you can actually understand it, with icons and arrows and nice charts.

This is of course not because they are so generous and open-hearted and want to contibute their knowledge to a free world. This is because if not for this tool it would be impossible to use their product at all. Stay assured that if they invented an algorithm that would allow generating optimal queries, they would do anything possible and impossible to keep it secret.

Inefficient plans leak through SQL abstraction like superfluid helium.

SQL abstraction leaks like a sieve filled with superfluid helium. And this is not because RDBMS developers are dumb. This is because the thing they are trying to abstract is way too complex to do it efficiently. The possible operations on sets and the intermediate results of these operations are so numerious and multifarious that with current level of technology it's just not possible to write an efficient optimizer, an optimizer as in optimal

.

In SQL world, a correct

query does not mean an optimal

query, which is true for almost tools. But is doesn't even mean a usable

query, since the query plan can be so unoptimal that you will wait forever for a query to complete.

Any decent SQL developer should know everything about execution plans and see in advance how efficient or not efficient they are.

Here is a classical example. Don't worry, it's quite simple.

Say, we need to select all rows from a table along with a running sum:

| id | value | sum |

|---|---|---|

| 1 | 10 | 10 |

| 2 | 20 | 30 |

| 3 | 15 | 45 |

sum here accumulates value added on each step.

In relational algebra, it's super easy to write a query that would make this resultset:

SELECT t1.id, t1.value, SUM(t2.value)

FROM mytable t1

JOIN mytable t2

ON t2.id <= t1.id

GROUP BY

t1.id, t1.value

[/sourcecode]

This reads as <q>for each <code>id</code>, return the sum of <code>value</code> in rows with <code>id</code> less or equal to given.</q>

Quite simple, right?

Wrong.

No <strong>SQL</strong> engine I'm aware of can run this exact query optimally.

An optimal solution would be something like <q>take a row from the table, record the current value of <code>sum</code>, take next <code>value</code>, add it to <code>sum</code>, pipeline the result</q>.

But the optimizers are not programmed to see this algorithm in the query worded as above. Best they can do is something like <q>take a row from the table, then take all other rows with smaller <code>id</code>s, calculate their sum, pipeline the row, then forget the previous result and do it all again, on a larger set of rows</q>. It the <em>best</em> algorithm they can come up with, and I'm not making this up. There are worse algorithms, like <q>select all matching rows, hash them together, calculate the sums and use disk space to keep intermediate results for they don't fit into memory</q>

Of course modern systems can use more efficient queries, like this:

SELECT id, value, SUM(t1.value) OVER (ORDER BY id)

FROM mytable

, but this is different query. An optimizer cannot see that this query is identical to the first one in terms of results. It's the task of an SQL developer to see it and reformulate the query.

Optimizers can efficiently do some simple tasks like picking a correct index, choosing correct access method depending on the filter selectivity and so on. However, it's not enough to allow formulating your queries in SQL so that they are just correct. You still need to formulate your queries so that they are usable, i. e. an optimizer is able to build an efficient plan for them.

That of course means you should know how optimizers work.

In eariler systems, like Oracle before version 8, optimizer had a set of rules. Like, if we see a filtering condition on an indexed field, we use that index, etc. For simple queries, that yielded decent plans, for more complex queries that was a pain.

More advanced optimizers try to predict how much time will one or another plan take. Usually they gather statistics to do that. That is, from time to time they see how the values are distributed along your tables, keep some aggregates, and try to predict the query time.

They sometimes mistake, but this is not the real problem. The real problem is that even the brightest of optimizers cannot reword even the simplest queries. They cannot even change a self-join to a window function in the query above. This still should be done by an SQL developer.

Thinking in SQL

means formulating you needs in terms of set operations

. This is what usually said. And this is truth, but not the whole truth. This is not enough to be a good SQL developer.

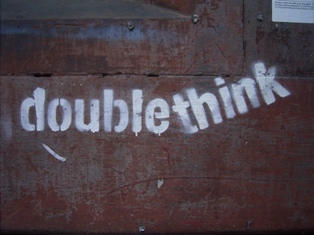

To be a superb SQL developer, you need to doublethink in SQL. This Orwellian term describes the process the best:

Doublethink means the power of holding two contradictory beliefs in one's mind simultaneously, and accepting both of them.

Set is Procedure

Image by: duncan

To be a good SQL developer, you should be able to imagine the query in terms of sets and in terms of algorithms at the same time.

It's not enough to come up with a set operation that yields correct results. It will be inefficient.

It's not enough to come up with a good algorithm that transforms your set as you need and try to force-feed it to the server using procedural approach. The servers are not good at that.

What you need to do is to formulate your query so that the optimizer chooses exactly the algorithm you need. And to do this, you should know both the algorithm and the set operations. You need to doublethink.

This is not always an easy task to do. But it definitely deserves learning.

And only if you learn it you will be able to harness all the power of SQL.

Subscribe in a reader

Subscribe in a reader

Excellent article… I followed your link from stackoverflow.

Mark Harrison

21 Jul 09 at 08:02

Great article!

Alon

28 Jul 09 at 19:45

I’ve been trying to explain this to people for so long!

You’ve arranged everything so clearly from top to bottom, I actually use your analogies now myself.

Great post.

Shlomo

31 Jul 09 at 20:49

Excellent article, found your site today looking for some basic SQL pointers and got totally side tracked, reading lots of your other posts.

Just brilliant,

thanks for sharing your knowledge!

Hans

1 Sep 09 at 13:57

How much of the difference in behavior (show plan versus don’t care) is due to the declarative model, and how much is due to the fact that query execution is really slow.

Are games programmers who are running into performance issues are doing the same as SQL developers?

artsrc

22 Sep 09 at 05:39

Insightful article.

I had myself ran into many situations during which the SQL engine decided upon absurdly inefficient methods at pulling things off– many-to-many joins are one potentially painful example.

Adam Coddington

22 Sep 09 at 09:50

@artsrc: if by “really slow” query execution you mean good algorithms badly implemented, then yes, sometimes it is really a problem with free engines like PostgreSQL and MySQL.

For instance, this article: https://explainextended.com/2009/09/18/not-in-vs-not-exists-vs-left-join-is-null-mysql/ shows same algorithm reimplemented three times in MySQL, one of implementations being less efficient.

SQL Server and Oracle are less prone to this, that is if they came up with a good algorithm, that means it will be as fast as possible. The problem is usually with the algorithm chosen (i. e. the query plan), not with its implementation.

Game gevelopers is a good analogy. However, inserting assembly pieces into code makes your code faster, while inserting custom functions sometimes make it slower, especially in Oracle (googling for “oracle context switch” will show you lots of gory details).

Quassnoi

22 Sep 09 at 09:56

@artscr: and one thing more that matters is that every system is programmed with a set of predefined algorithms and the query optimizers just have to build a Lego castle out of them.

MySQL, for instance, mow, in 2009, is not capable of doing HASH JOINs and MERGE JOINs (which I think were invented by Leonardo da Vinci or about that time). That means that for certain tasks you will never have decent performance, period.

And no engine allows adding algorithms into the query plan.

Quassnoi

22 Sep 09 at 10:07

Great article! Thank you.

Jordan

23 Sep 09 at 01:26

Why anyone continues to bother with MySQL (for anything beyond storing your golf scores or pumpkin pie recipes) is beyond me… Yes, it’s free, but in the end, you get exactly what you pay for! Continue to struggle with that TOY, or invest a few bucks in an enterprise-class DBMS.

Sybase SQLAnywhere.

Paul Horan

23 Sep 09 at 07:41

This is one of the best articles on SQL I have ever read… Very well written and well thought off!

Thanks a ton!

Abe

16 Dec 10 at 05:22

@Abe: you’re welcome!

Quassnoi

16 Dec 10 at 13:21

Fantastic article

Manoj

13 Jun 14 at 12:44

Read the Full Piece of writing

Double-thinking in SQL | EXPLAIN EXTENDED

Read the Full Piece of writing

21 Jul 14 at 22:17

Really really great article. So well structured. I have been thinking about writing a polemical “SQL is your friend” article aimed at my fellow OOP focussed web devs coming from someone who once did *everything* with SQL. If I do it this will be a primary source.

Anyway, one thing that would make this even better would be a worked example of how one might solve the example problems shown here.

Personally, my approach is to try to be pragmatic. As you said, SQL is an abstraction designed to be a time saving device so I don’t want to spend loads of time trying to get my time-saving device to save time.

So if faced with your running sum example:

First I’d notice that I haven’t told it to `order by id`. Without that it won’t reliably give me the result I want although in practice with most engines it will. It so happens that most engines go by the chronological order items were physically created so that could be broken if a record for a certain ID were deleted and re-inserted with the same ID.

So if I order by id perhaps I’ll get an optimal query but I wouldn’t count on it. If I don’t I’ll crack open the explain and look for any possible quick fixes. e.g. ID has a unique index, right? And it’s not a hashmap index? Cool.

If I can’t get an answer relatively fast I’ll accept that I’m asking by database to do something fundamentally not set oriented and instead I’ll ask what parts of the query really are set-oriented and doing heavy lifting.

In this simple example there are not other parts but more commonly in the real world I’ll probably also aggregating or calculating the second column, getting context from related tables and using a `where` clause to filter. So unless I have a compelling reason to keep the whole thing pure SQL (e.g. I want to maintain an elegant network of views) and assuming I’m already doing this query as part of a routine in a more general language like ruby or java I’ll do the heavy lifting in SQL but add the running sum column outside of that.

It may be a little frustrating to feel close but not quite finding the magic formula but I think this is all part of maintaining a positive attitude toward SQL. I mean, people say “I hate SQL because it’s unpredictable, makes hard to understand decisions, etc…”. I say take the massive good stuff it offers be easygoing about the rest. Like you, I think that it’s attempting to do a really hard thing and delivering the goods most of the time. Every time it delivers it has saved all sorts of trouble I could have painstakingly figure out myself and covered tonnes of edge cases I haven’t noticed.

Start the queries simple, build up bit by bit seeing what it can handle, be kind about where its breaking points are. It’s like your genius productive but temperamental assistant. If it gets upset about that fifth join play sympathetic therapist for a short while but don’t spend ages and don’t be authoritarian. If SQL and that fifth join hate each other don’t force them to try being friends at all costs.

Adam

7 Apr 17 at 17:10

are you sure it set-based and not multiset/bag based

Serge

30 Apr 18 at 06:16

Just wanted to point out: cooking eggs in rice cooker isn’t at all a bad choice of tool. A LOT of Asian countries do that.

Philp

5 Jul 23 at 21:43

I’m sure they do. You can totally use a rice cooker to boil eggs.

When I was working in an office with a kitchen that had nothing but a microwave oven in it, I boiled eggs in the microwave oven. The secret is to wrap them in tin foil and put into a bowl of salt water.

Then I bought a used egg steamer off a classified ad site for $5 and stopped boiling eggs in the microwave oven.

Quassnoi

5 Jul 23 at 22:56